Document semantic search and categorization

The Client

A large Europe-based B2B company that provides a secure platform for storing, managing and operating on corporate documentation together with fragmented databases, where data is split into multiple tiers according to its publicity and sensitivity. For this reason, the platform offers a deep and flexible permission structure for both documents and data, managed centrally.

The Problem

Enormous amounts of information spread across countless documents, plus gigabytes of structured and semi-structured data in a number of databases, make it hard to find answers quickly and effectively. The data operator has to know not only where to look but also how to query effectively. Even when an operator does not need to know the query language, they still have to know which tables or data structures to look at, which documents to search, and how to merge the gathered information into a meaningful response.

The client needed a solution that would let them organize their data by content, then simply write a prompt and receive either a summary or a detailed selection of data giving the exact information on the topic.

Challenges

Data security and compliance

As this is a multi-tenant B2B platform, data isolation was the top priority: no entity should ever see documents it does not own. At the same time, the product had to enforce each customer's permission policy and remain compliant with local regulations.

Variety of data sources and formats

The system was expected to work with structured, semi-structured and unstructured data directly from the original sources. A customer would link their data sources to the platform and then work with them from the platform's web interface or via the API.

API integration with 3rd party

Alongside the provided web interface, the client wanted an API-based approach so that their customers could build native-looking integrations into their own products and interact with the AI platform from within their in-house UIs.

The Solution

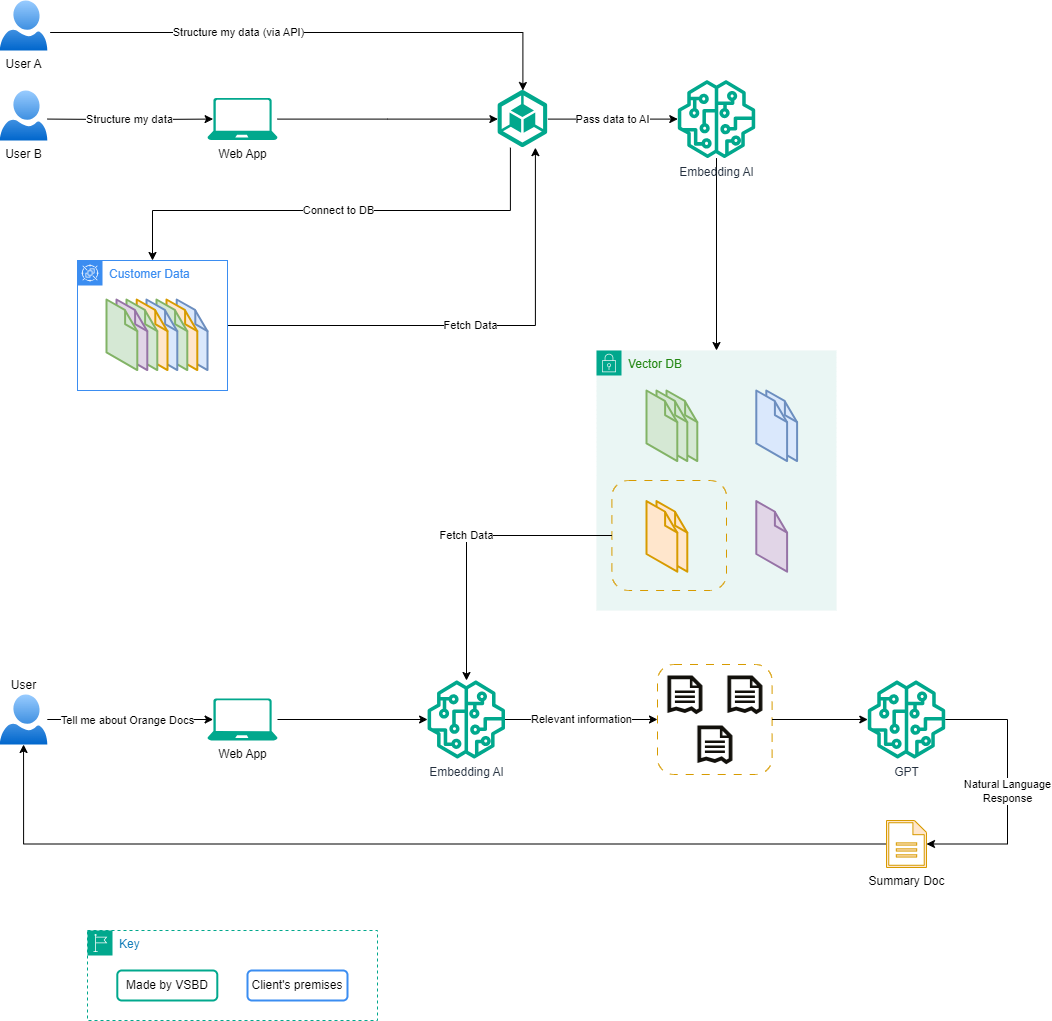

A classic web application served as the primary UI. Each customer could choose whether to work through our web interface or integrate via the API. This called for an authentication/authorization module that supports both local and remote user management (SSO, MS AD) as well as service-to-service authorization (OIDC).

We built on the concept of data connectors: each new connector implementation expands the pool of supported data sources. This way we supported several file storage sources (SharePoint, S3) as well as the main SQL engines and common NoSQL solutions such as MongoDB and Cassandra. Our internal data spaces stored only the metadata of the sources and fragmented pieces of data that would not allow any useful extraction if compromised.
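The connector concept can be illustrated with a minimal Python sketch, assuming a common interface that every source type implements and a registry keyed by source kind. `DataConnector`, `SourceRecord`, `register`, `create_connector` and the in-memory stand-in are hypothetical names; real SharePoint, S3, SQL or MongoDB connectors would wrap those systems' own client libraries behind the same interface.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass, field
from typing import Callable, Dict, Iterator, List, Type


@dataclass
class SourceRecord:
    """Hypothetical unit of content returned by a connector."""
    source_id: str
    content: str
    metadata: Dict[str, str] = field(default_factory=dict)


class DataConnector(ABC):
    """Hypothetical interface that every data source connector implements."""

    @abstractmethod
    def list_items(self) -> List[str]:
        """Return identifiers of the documents/tables available in the source."""

    @abstractmethod
    def read(self, item_id: str) -> SourceRecord:
        """Fetch one item from the original source."""


# Registry of connector classes, keyed by source kind.
_REGISTRY: Dict[str, Type[DataConnector]] = {}


def register(kind: str) -> Callable[[Type[DataConnector]], Type[DataConnector]]:
    """Class decorator: supporting a new source type means registering one class."""
    def wrap(cls: Type[DataConnector]) -> Type[DataConnector]:
        _REGISTRY[kind] = cls
        return cls
    return wrap


def create_connector(kind: str, **config) -> DataConnector:
    """Instantiate the connector registered for `kind` with its config."""
    return _REGISTRY[kind](**config)


@register("in_memory")
class InMemoryConnector(DataConnector):
    """Stand-in for a real SharePoint/S3/SQL connector, for illustration only."""

    def __init__(self, items: Dict[str, str]):
        self._items = items

    def list_items(self) -> List[str]:
        return sorted(self._items)

    def read(self, item_id: str) -> SourceRecord:
        return SourceRecord(item_id, self._items[item_id], {"source": "in_memory"})


def iter_records(connector: DataConnector) -> Iterator[SourceRecord]:
    """Generic pipeline code: works the same for any registered connector."""
    for item_id in connector.list_items():
        yield connector.read(item_id)
```

With this shape, supporting a new source means adding one registered class, while downstream code keeps calling `iter_records` unchanged.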

The AI side of the solution consisted of two parts. The first, categorization, was handled by a series of classic deep learning models that combined engineered features (document length, taxonomy) with content embeddings to label documents and tables. It used a combination of binary and multiclass classification: there was one model per base class, which decided whether a document belonged to that base category and then identified the subcategories within the class. As a result, adding a new category required one additional model of the same kind and in most cases did not affect the other categories, while rearranging subcategories required retraining only the single model for that class.
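The per-class structure can be sketched in Python. This is a hypothetical illustration, not the production models: each base class holds a binary membership scorer and a subcategory scorer, both represented as plain callables standing in for trained deep learning models fed with engineered features plus content embeddings. `BaseClassModel`, `label` and the 0.5 threshold are assumptions.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Sequence, Tuple

# Engineered features and content embedding, concatenated into one vector.
Features = Sequence[float]


@dataclass
class BaseClassModel:
    """One model per base category (hypothetical structure).

    `is_member` plays the role of the binary head (probability in [0, 1]);
    `subcategory_scores` plays the role of the multiclass head over this
    class's own subcategories.
    """
    name: str
    is_member: Callable[[Features], float]
    subcategory_scores: Callable[[Features], Dict[str, float]]


def label(features: Features, models: List[BaseClassModel],
          threshold: float = 0.5) -> List[Tuple[str, str]]:
    """Return (base_category, best_subcategory) for every base class that accepts the item."""
    labels = []
    for model in models:
        if model.is_member(features) >= threshold:
            scores = model.subcategory_scores(features)
            labels.append((model.name, max(scores, key=scores.get)))
    return labels


# Toy stand-ins for trained models.
finance = BaseClassModel(
    "finance",
    is_member=lambda f: f[0],
    subcategory_scores=lambda f: {"invoice": f[1], "report": 1 - f[1]},
)
legal = BaseClassModel(
    "legal",
    is_member=lambda f: f[2],
    subcategory_scores=lambda f: {"contract": 0.7, "nda": 0.3},
)

print(label([0.9, 0.8, 0.1], [finance, legal]))  # [('finance', 'invoice')]
```

Adding a new base category appends one more `BaseClassModel` without touching the others, while reshuffling a class's subcategories only changes that class's model, which mirrors the trade-off described above.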

The second part, contextual search, combined a neural network model with a vector database: the model converted the prompt into a vector representation, and the vector database retrieved the relevant data. The retrieved results were then passed to a self-hosted LLM instance (GPT), which produced a human-friendly response with references to the data sources. Users could set the required degree of similarity between the search query and the returned results, and the system could generate multiple responses so that the user could pick the best one.
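A minimal sketch of the retrieval step, assuming embeddings have already been computed: a brute-force cosine search stands in for the vector database, `min_similarity` models the user-adjustable similarity setting, and `build_prompt` shows how chunk ids can travel into the LLM context so the answer can cite its sources. All names, the 0.75 default and the prompt wording are hypothetical; a real vector database would use an approximate nearest-neighbour index rather than a linear scan.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query: Vector, index: Dict[str, Vector],
             min_similarity: float = 0.75, top_k: int = 3) -> List[Tuple[str, float]]:
    """Linear-scan stand-in for a vector DB query: keep chunks at or above the
    chosen similarity threshold, best first, at most top_k of them."""
    scored = ((chunk_id, cosine(query, vec)) for chunk_id, vec in index.items())
    hits = [(cid, s) for cid, s in scored if s >= min_similarity]
    hits.sort(key=lambda item: item[1], reverse=True)
    return hits[:top_k]


def build_prompt(question: str, hits: List[Tuple[str, float]],
                 texts: Dict[str, str]) -> str:
    """Assemble the LLM context; chunk ids stay attached so the answer can cite them."""
    context = "\n".join(f"[{cid}] {texts[cid]}" for cid, _ in hits)
    return (
        "Answer using only the sources below and cite them by id.\n"
        f"{context}\n\nQuestion: {question}"
    )
```

Raising `min_similarity` trades recall for precision: fewer chunks reach the LLM, but each one is closer to the query.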

To ensure security, data belonging to different tenants was kept both logically and physically separated. Access was controlled by tenant management implemented in the authorization module.
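The isolation rule can be sketched as a store in which every tenant has its own partition and every query is checked against the caller's tenant and group memberships before any data is touched. In this hypothetical Python sketch, dictionaries stand in for the physically separate databases, and `Principal`, `allowed_groups` and `TenantPartitionedStore` are illustrative names rather than the platform's actual API.

```python
from dataclasses import dataclass, field
from typing import Dict, FrozenSet, List


class TenantAccessError(PermissionError):
    """Raised when a caller tries to reach another tenant's partition."""


@dataclass(frozen=True)
class Principal:
    """Caller identity as resolved by the authorization module (hypothetical shape)."""
    user_id: str
    tenant_id: str
    groups: FrozenSet[str] = frozenset()


@dataclass
class TenantPartitionedStore:
    """One partition per tenant; nothing is shared between partitions."""
    partitions: Dict[str, Dict[str, dict]] = field(default_factory=dict)

    def put(self, tenant_id: str, doc_id: str, doc: dict) -> None:
        self.partitions.setdefault(tenant_id, {})[doc_id] = doc

    def search(self, principal: Principal, tenant_id: str, term: str) -> List[str]:
        # Tenant check first: a caller never reaches another tenant's partition.
        if principal.tenant_id != tenant_id:
            raise TenantAccessError(f"{principal.user_id} cannot access tenant {tenant_id}")
        partition = self.partitions.get(tenant_id, {})
        # Then the customer's own document-level permission policy.
        return sorted(
            doc_id for doc_id, doc in partition.items()
            if term in doc["text"] and doc["allowed_groups"] & principal.groups
        )
```

Putting the tenant check ahead of any lookup means a bug in the document-level filter can at worst leak data within a tenant, never across tenants.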

Obstacles

Feature engineering

The main challenge was identifying useful features for highly diverse datasets. We were able to split the data into a few groups and design a dedicated feature set for each. For files, the features included document size, taxonomy, names and numbers; for tables, the number of columns and their types, the relations between tables, naming, and so on. As a result, each type of data source had its own group of features. Since the features depended on the content, the data had to be preprocessed before being fed into the ML model.
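The two feature groups can be sketched as plain Python functions that run on already-preprocessed content (extracted text for files, schema metadata for tables). The specific features, the `Column` type and the date-in-filename heuristic are illustrative assumptions loosely following the examples above, not the project's actual feature set; taxonomy features are omitted.

```python
import re
from dataclasses import dataclass
from typing import Dict, List, Optional


def file_features(name: str, text: str) -> Dict[str, float]:
    """File-level features (illustrative): size, token count, share of numeric tokens."""
    tokens = re.findall(r"\w+", text)
    numbers = [t for t in tokens if t.isdigit()]
    return {
        "size_chars": float(len(text)),
        "n_tokens": float(len(tokens)),
        "number_ratio": len(numbers) / len(tokens) if tokens else 0.0,
        # Crude naming signal: does the file name contain a YYYY-MM or YYYY_MM stamp?
        "name_has_date": 1.0 if re.search(r"\d{4}[-_]\d{2}", name) else 0.0,
    }


@dataclass
class Column:
    name: str
    dtype: str                        # e.g. "int", "text", "date"
    references: Optional[str] = None  # referenced table if this is a foreign key


def table_features(table_name: str, columns: List[Column]) -> Dict[str, float]:
    """Table-level features (illustrative): width, type mix, relations, naming."""
    n = len(columns)
    features = {
        "n_columns": float(n),
        "n_foreign_keys": float(sum(c.references is not None for c in columns)),
        "n_id_columns": float(sum(c.name.lower().endswith("_id") for c in columns)),
        "name_parts": float(len(table_name.split("_"))),
    }
    # Share of each column type; 0.0 for an empty table.
    for dtype in ("int", "text", "date"):
        features[f"share_{dtype}"] = sum(c.dtype == dtype for c in columns) / n if n else 0.0
    return features
```

Keeping each group's output as a flat numeric dictionary makes it easy to concatenate with a content embedding before classification.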

Latency

We experimented with several vector databases (Chroma, Milvus) as well as managed services (Zilliz, Pinecone), and settled on a self-hosted solution with the embedding dimensionality tuned to give reasonable accuracy (95%+) and consistent performance, keeping the waiting time to a couple of seconds at most.

Data volumes

As data volumes grew to hundreds of gigabytes and trended toward terabyte scale, data management optimizations became crucial: data sharding, parallel processing, and carefully chosen data storage parameters. We also adopted upfront asynchronous data upload and processing, prioritized by the estimated likelihood that the customer would need that data. The nature of the search workload let us classify data as hot, warm, cool or cold according to how likely it was to be involved in a search, and to exclude the metadata of cold data from search, which both sped up the process and freed unused storage.
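The tiering idea can be sketched as follows, assuming the platform records when each dataset last contributed to a search result. The day-based thresholds, `DatasetStats` and the function names are hypothetical; in practice they would be tuned to each customer's search patterns. Cold datasets drop out of the searchable set, and the same ranking orders the upfront asynchronous processing.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from enum import Enum
from typing import Iterable, List


class Tier(Enum):
    HOT = 0
    WARM = 1
    COOL = 2
    COLD = 3


@dataclass
class DatasetStats:
    dataset_id: str
    last_hit: datetime  # last time the dataset contributed to a search result


# Hypothetical cut-offs: maximum age of the last hit for each tier.
THRESHOLDS = [
    (timedelta(days=1), Tier.HOT),
    (timedelta(days=7), Tier.WARM),
    (timedelta(days=30), Tier.COOL),
]


def classify(stats: DatasetStats, now: datetime) -> Tier:
    """Map the age of the last search hit to a temperature tier."""
    age = now - stats.last_hit
    for limit, tier in THRESHOLDS:
        if age <= limit:
            return tier
    return Tier.COLD


def searchable_ids(datasets: Iterable[DatasetStats], now: datetime) -> List[str]:
    """Metadata of cold datasets is left out of the search index."""
    return [d.dataset_id for d in datasets if classify(d, now) is not Tier.COLD]


def processing_order(datasets: Iterable[DatasetStats], now: datetime) -> List[str]:
    """Order for upfront async processing: data most likely to be needed goes first."""
    return [d.dataset_id for d in sorted(datasets, key=lambda d: classify(d, now).value)]
```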

The Value

The client gained a significant competitive advantage by offering intelligent features that take working with corporate data to a new level. The solution improved the overall user experience, reduced processing time, and raised the quality of data processing, summarization and search. Working with data shifted from engineering-style processing to conversation: users write chat-based prompts and receive LLM-generated responses. Each response includes references to fragments of the source documents or data, so its accuracy can be verified, which minimizes the risk of misleading answers.

VSBD - Software Development

All Rights Reserved | VSBD