Surveillance Cameras

Pattern recognition on surveillance cameras

The Client

The client is a provider of automated surveillance systems operating in a couple of countries across multiple continents. It offers a number of intelligent solutions meant to bring surveillance to a new level: human-based monitoring and static cameras are replaced with systems that combine static and moving cameras, bodycams and surveillance drones into a common ecosystem capable of automatically detecting anomalies and reacting to them (for example, with notifications or alerts).

The Problem

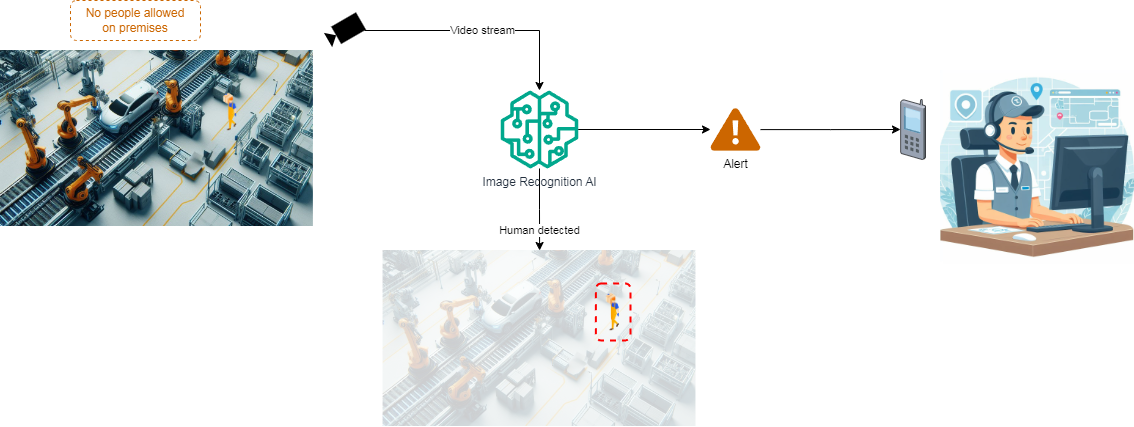

All the surveillance systems of a single premises form a surveillance family. All families are operated from a SaaS solution in which each customer can operate their own family: watch videos, configure cameras, add or remove family members, manage operators and so on. Our task was to build an ML-based solution that analyzes the incoming video content and identifies various occurrences, the nature of which depends on the location. Examples include perimeter violations, safety violations (such as employees not wearing safety belts while working at height), wild animals inside the perimeter and weather phenomena. Each event should trigger its own reaction pipeline.

Challenges

Unlimited use cases

Every location has its own dedicated set of anticipated patterns, and there is no complete set of use cases that covers all possible locations.

Latency

It was very important to react to events as fast as possible. Ideally the system should react in near real time, which was really challenging given the amount of data to be processed simultaneously.

Data volumes

Each premises had tens of cameras streaming all the time, which creates enormous volumes of HD video content to process. Combined with multi-tenancy, this poses a serious scaling and processing-optimization challenge: keeping both infrastructure expenses and reaction time reasonable.

The Solution

The task is a classic classification problem. It may seem to involve time-series data, but in fact it comes down to a yes-or-no answer for a given media fragment. Since each premises implied quite different use cases, it was pointless to build a one-for-all neural network. Not only would it be too heavy and slow to process such a huge variety of scenarios, it would also perform a lot of obviously irrelevant work on cases that never occur at a given location. Instead, a set of ML models was built, each covering a group of events, which makes some models more common and others more specific. A fraction of the use cases covered by a model would still be irrelevant to a particular family of surveillance cameras, but this was a fair balance between processing redundancy and infrastructure complexity. The ML models worked independently, and the notification component was configurable to produce events based on their results. Irrelevant results were simply ignored.
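The configurable notification step described above could look roughly like the following minimal sketch. The `Detection` shape, the family identifiers, the event names and the reaction names are all hypothetical and serve only as illustration; the actual component is not described in that much detail.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """One yes-or-no model result for a media fragment (hypothetical shape)."""
    family_id: str
    event_type: str
    detected: bool


# Hypothetical per-family configuration: event type -> reaction name.
FAMILY_REACTIONS = {
    "warehouse-1": {"perimeter_violation": "alert_security"},
    "construction-7": {"missing_safety_belt": "notify_supervisor"},
}


def route(detections, reactions=FAMILY_REACTIONS):
    """Return (family_id, reaction) pairs for relevant positive detections."""
    triggered = []
    for d in detections:
        # Event types that a family has not configured have no reaction.
        reaction = reactions.get(d.family_id, {}).get(d.event_type)
        if d.detected and reaction is not None:
            triggered.append((d.family_id, reaction))
    return triggered


results = [
    Detection("warehouse-1", "perimeter_violation", True),
    Detection("warehouse-1", "wild_animal", True),              # not configured: ignored
    Detection("construction-7", "missing_safety_belt", False),  # negative: ignored
]
print(route(results))  # [('warehouse-1', 'alert_security')]
```

Keeping relevance in configuration rather than in the models is what lets the same model serve several families at once.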

To ensure proper reaction time and load management, it was important to preprocess video content before sending it to the AI component. For the input video we could reduce the resolution, convert it to grayscale, reduce the frame rate and more. The preprocessing specifics depended on the type of expected event, the camera location and the distance to the observed object, so they could not be handled by the AI component itself, which was meant to be common to various cameras, even in different locations. We therefore built a standalone preprocessing component that accepts the video together with the preprocessing parameters and passes the processed content to the AI component. The AI component consists of a number of ML models built on Convolutional Neural Networks, which are typical for this type of task.
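As an illustration of a parameter-driven preprocessing step, here is a minimal pure-Python sketch. It assumes frames are already decoded into rows of `(r, g, b)` tuples. The `PreprocessParams` fields and the naive every-Nth-pixel downscaling are assumptions made for this example; a production component would more likely rely on a video library.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PreprocessParams:
    """Hypothetical per-camera settings chosen for the expected event type."""
    scale_step: int = 2    # keep every Nth pixel in each dimension
    frame_step: int = 2    # keep every Nth frame
    grayscale: bool = True


def to_gray(pixel):
    """Convert an (r, g, b) pixel to one luma value (ITU-R BT.601 weights)."""
    r, g, b = pixel
    return round(0.299 * r + 0.587 * g + 0.114 * b)


def preprocess(frames, params):
    """frames: list of frames, each a list of rows of (r, g, b) tuples."""
    processed = []
    for frame in frames[::params.frame_step]:          # reduce the frame rate
        small = [row[::params.scale_step]               # reduce the resolution
                 for row in frame[::params.scale_step]]
        if params.grayscale:
            small = [[to_gray(p) for p in row] for row in small]
        processed.append(small)
    return processed


# Four 4x4 white frames become two 2x2 grayscale frames.
video = [[[(255, 255, 255)] * 4 for _ in range(4)] for _ in range(4)]
result = preprocess(video, PreprocessParams())
print(len(result), len(result[0]), len(result[0][0]))  # 2 2 2
```

Because the parameters travel with the video, the same models can receive input tuned per camera without knowing anything about the camera.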

Obstacles

Video overall processing time

Although a neural network processes video faster than it is recorded, the large number of video streams and the unbalanced use cases mean that one neural network sometimes has much more content to process than another. To address this, we scaled the service based on the length of the video file queue.
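A queue-length-based scaling rule can be sketched as follows. The function name, the target of ten queued files per replica and the replica bounds are assumptions chosen for illustration, not the values used in the project. A minimum of zero mirrors the scale-to-zero behaviour mentioned under Cost optimization.

```python
import math


def desired_replicas(queue_length, files_per_replica=10,
                     min_replicas=0, max_replicas=20):
    """Return how many model replicas to run for the current queue length."""
    if queue_length <= 0:
        return min_replicas
    # One replica per `files_per_replica` queued files, rounded up.
    needed = math.ceil(queue_length / files_per_replica)
    return max(min_replicas, min(needed, max_replicas))


for queued in (0, 1, 35, 1000):
    print(queued, desired_replicas(queued))
```

Each model has its own queue, so a busy model can grow without affecting idle ones.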

Training sets

Because the use cases are so specific, it was really difficult to prepare large enough training sets. To address this we used a couple of techniques, including data augmentation, k-fold cross-validation and dataset synthesis using generative AI.
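To show how two of these techniques help with small datasets, here is a minimal sketch of a k-fold split and one simple augmentation (a horizontal flip). Both helpers are illustrative assumptions rather than the project's training code; in practice the samples would be shuffled first, and a library implementation such as scikit-learn's `KFold` would usually be preferred.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, val_idx) pairs; each sample is validated exactly once."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    # Spread the remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0)
                  for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, val
        start += size


def flip_horizontal(frame):
    """Mirror a frame (a list of pixel rows) to create an extra training sample."""
    return [row[::-1] for row in frame]


for train_idx, val_idx in k_fold_indices(10, 3):
    print(len(train_idx), val_idx)
```

With k folds every labeled fragment is used for both training and validation, which matters when labeled footage is scarce.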

Cost optimization

On the bright side, the solution does not require additional massive data storage, but it is very compute-dependent. With multi-tenancy, the amount of content to process is massive and requires significant compute resources. To address this we tested various technical solutions, including bespoke ones and managed services (such as SageMaker on AWS or Azure Cognitive Services). We ended up with a set of self-managed Kubernetes clusters that benefit from reserved-capacity discounts and can scale certain models down to zero when there is no demand for a period of time.

The Value

The intelligent solution turned out to be very flexible thanks to its distributed architecture and the elasticity of cloud services. It improved the quality of video monitoring and freed the resources previously dedicated to manual monitoring for other activities. The balance of video preprocessing, queuing and instance scaling, with just the right performance, helps the solution stay within reasonable pricing while providing close to real-time notifications.

VSBD - Software Development

